Near-Linear Lower Bound for Dimension Reduction in L1

Presenter

September 23, 2011

Keywords:

- probability theory

- quantitative geometry

- compressed sensing

- distortion

- embedding theorems

- dimensional reduction

MSC:

- 60Gxx

- 60-xx

- 46-xx

- 46Bxx

- 46B06

- 46B09

- 46B20

- 46B80

- 46B85

Abstract

Given a set of $n$ points in $\ell_1$, how many dimensions are needed to represent all pairwise distances within a specific distortion?

This dimension-distortion tradeoff question is well understood for the $\ell_2$ norm, where $O((\log n)/\epsilon^{2})$ dimensions suffice to achieve $1+\epsilon$ distortion. In sharp contrast, there is a significant gap between upper and lower bounds for dimension reduction in $\ell_1$. A recent result shows that distortion $1+\epsilon$ can be achieved with $n/\epsilon^{2}$ dimensions.

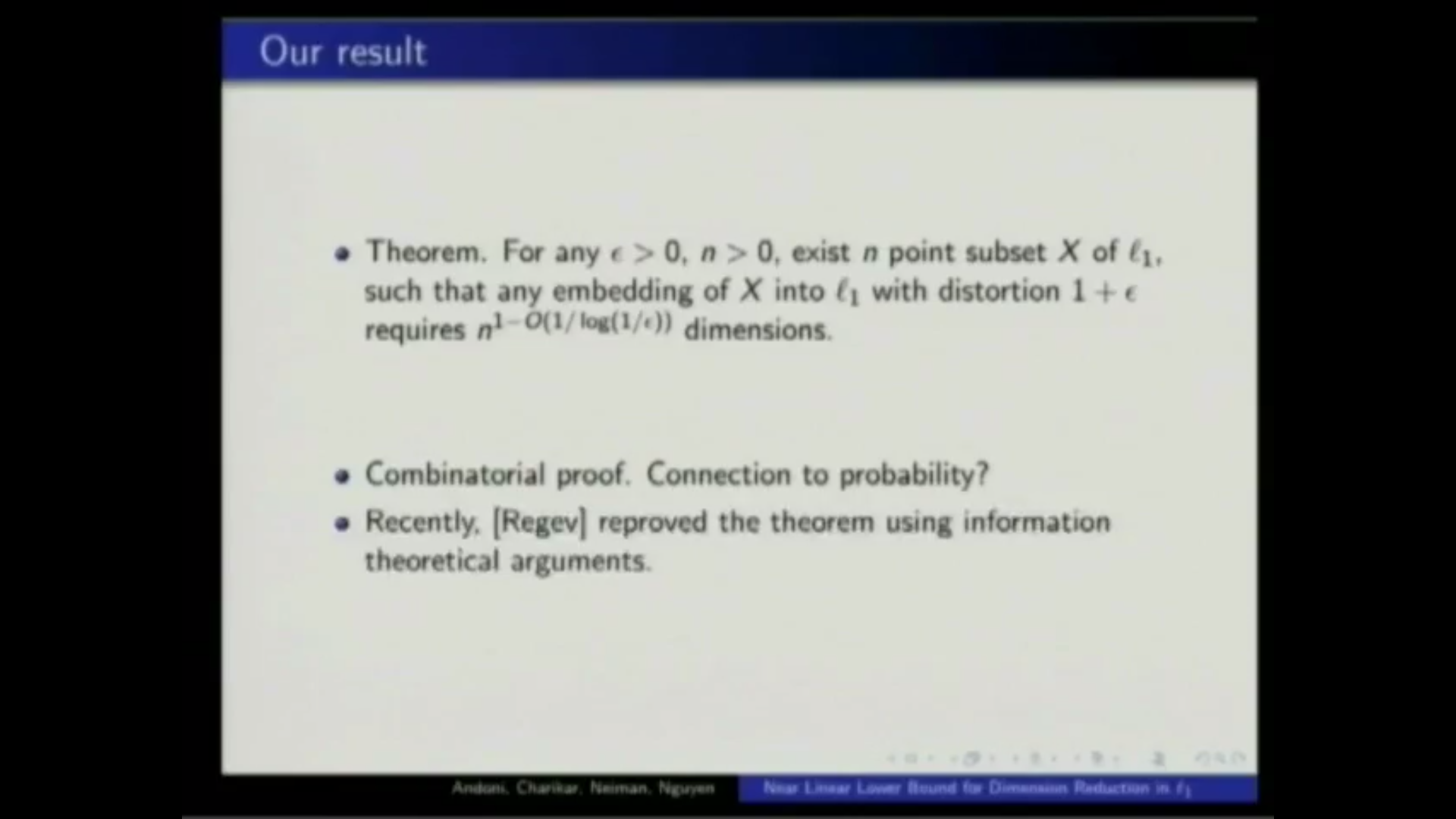

On the other hand, the only lower bounds known are that distortion $\delta$ requires $n^{\Omega(1/\delta^2)}$ dimensions and that distortion $1+\epsilon$ requires $n^{1/2-O(\epsilon \log(1/\epsilon))}$ dimensions. In this work, we show the first near linear lower bounds for dimension reduction in $\ell_1$. In particular, we show that $1+\epsilon$ distortion requires at least $n^{1-O(1/\log(1/\epsilon))}$ dimensions. Joint work with Moses Charikar, Ofer Neiman, and Huy L. Nguyen.