Greedy Algorithms for Structurally Constrained High Dimensional Problems

Presenter

March 27, 2012

Keywords:

- Higher dimensions

MSC:

- 32H30

Abstract

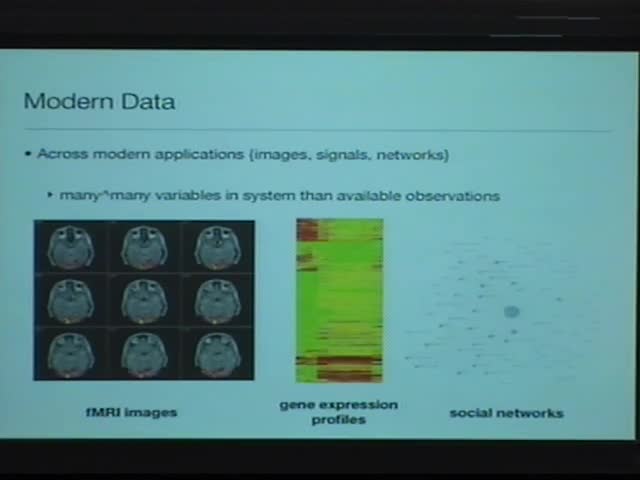

Modern problems across science and engineering increasingly require high-dimensional modeling: models with more parameters than observations. It is now well understood that statistically reliable inference is still possible under such high-dimensional settings, provided one restricts to constrained subclasses of models with particular low-dimensional structure. Examples include linear regression with sparsity constraints (compressed sensing), estimation of covariance or inverse covariance matrices, sparse principal component analysis, low-rank matrix estimation, and sparse additive non-parametric models. Over the past decade, there has been a strong body of work that have proposed statistical estimators for infering such structurally constrained high-dimensional models, with strong statistical guarantees.

In this talk, we consider the computational facet of such estimation: could one provide a general computational scheme to solve any of the convex optimization problems that arise in such high-dimensional inference? We find that such a general computational scheme is indeed possible: we discuss a scheme based on a greedy strategy. Our framework not only unifies existing greedy algorithms that have proposed for such high-dimensional problems by recovering them as special cases but also yields novel ones.

Based on joint work with Inderjit Dhillon and Ambuj Tewari.