Feature purification: How adversarial training can perform robust deep learning

Presenter

March 16, 2020

Abstract

To connect to the CSDM Seminar via Zoom, please do the following:

1 - If you have Zoom installed on your device, enter the following meeting ID: 360043913 , click Join Meeting.

2 - If you do not have Zoom installed, click the following link to download and install: https://theias.zoom.us/j/360043913

3 - Once installed, click the very same link to connect to the meeting.

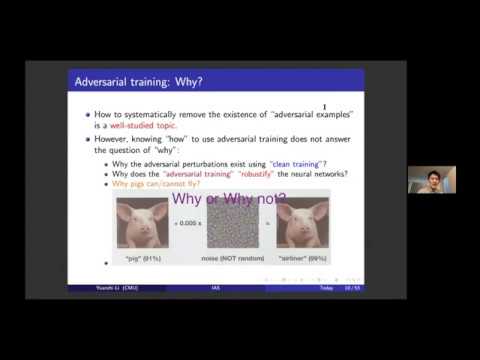

Why deep learning models, trained on many machine learning tasks, can obtain nearly perfect predictions of unseen data sampled from the same distribution but are extremely vulnerable to small perturbations of the input? How can adversarial training improve the robustness of the neural networks over such perturbations? In this work, we developed a new principle called "feature purification''. Mathematically, the principle states that for a multi-layer ReLU neural network, let w_i^0 be the weight of the i-th neuron at initialization, w_i be its weight after clean training (starting from w_i^0), and w_i' as its weight after adversarial training (starting from w_i), then for most of the neurons, there are scaling factors \beta_i and vectors v_i such that w_i = \beta_i w_i' + v_i with ||\beta_i w_i' || > ||v_i||, while both w_i and w_i' have nearly zero correlations with w_i^0.

Conceptually, the principle says that while both clean training and adversarial training will discover certain features fundamentally different from the initial values, clean training actually already discovers a big portion of the "robust features" obtained by adversarial training. Thus, instead of needing to learn new "robust features" or completely remove "non-robust features", adversarial training can simply robustify the neural network by purifying the clean trained features.

In this work, we present both experiments demonstrating the principle, and a mathematical model formally proving it. In particular, we show that for certain binary classification tasks, when we train a two-layer ReLU network using SGD, (1). Both clean training and adversarial training will learn features with nearly zero correlations with the (random) initialization. (2). After clean training, the network will provably achieve > 99% clean accuracy but 99% robust accuracy in polynomial samples and running time by simply "purifying" the clean trained features. Our lower bound that clean trained network has